Mass Univariate ERP Toolbox: general advice

General Advice on Applying Mass Univariate Analyses

1. What should I do first?

Although mass univariate tests allow you to correct for multiple comparisons without sacrificing undue power, performing many comparisons can still reduce the power of your analysis. Thus, it still pays to use a priori expectations about the experimental results to constrain your analysis. Here are some ways to decrease the number of comparisons and increase power:

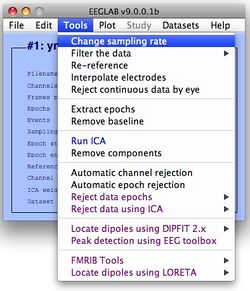

- If you're applying a tmax permutation test procedure, reduce the sampling rate of your data (i.e., "decimate" your data) to match the smallest duration effect you expect. For example, if you recorded your data using a sampling rate of 250 Hz you could decimate your data down to a sampling rate of about 125 Hz. Going from 250 Hz to 125 Hz effectively reduces the number of comparisons by a factor of 2 (i.e., you have a sample every 8 ms instead of every 4). Any ERP effects in your data probably last longer than 4 ms, thus you will probably find the same effects in your data at 125 Hz that you would find at 250 Hz, and the effects will be more likely to be significant. You can decimate your data in a GND variable by using the MATLAB function decimateGND (enter >>help decimateGND into MATLAB for an example). You can also decimate your data via the EEGLAB GUI by using the "Change sampling rate" option under the "Tools" menu:

If you're applying a false discovery rate (FDR) control algorithm to your analysis, changing the sampling rate probably won't have much of an effect. That's because FDR control depends on the proportion of comparisons that are significant, and changing the sampling rate a bit probably won't affect that proportion (i.e., it will just affect the number of significant comparisons). See Yekutieli & Benjamini's (1999) brief discussion of data resolution and FDR control for more on this issue. Likewise, if you're applying a cluster-based permutation test, changing the sampling rate a bit is unlikely to have much of an effect, since it is unlikely to greatly affect cluster mass rankings.

- First test for differences in a priori specified time windows (e.g., a 300-500 ms N400 window), averaging within each time window. This will greatly increase your power to detect expected effects. Once you've tested the time window(s) where you expected effects, test all time points of interest (e.g., 100 to 900 ms). This will allow you to detect unexpected effects, though the power of the analysis may be relatively weak.

- Remove electrodes from your analysis where you don't expect effects (e.g., ocular electrodes, A2). If the signal-to-noise ratio of your data is quite low (e.g., you have few subjects and few trials per bin), you may want to include only those electrodes where you expect an effect to be most reliable (e.g., electrode Pz for a P300 effect). Alternatively, you could replace multiple electrodes (e.g., all the left frontal electrodes) with their average. Currently, we don't have MATLAB code that supports this.

- Exclude periods of time during which it is unlikely for effects to occur (e.g., between 0 and 50 ms following a visual stimulus).
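To get a feel for how much each of these choices shrinks the family of tests, it can help to count comparisons directly. The following is a minimal sketch in plain Python (it does not use the toolbox). The 32-channel montage and the 6 excluded channels are hypothetical numbers chosen for illustration, and the helper n_comparisons is not a toolbox function. It assumes a sample falls exactly on the first time point of the window.

```python
def n_comparisons(start_ms, stop_ms, srate_hz, n_channels):
    """Count time point x channel tests in a window (both ends inclusive)."""
    step_ms = 1000.0 / srate_hz                     # time between samples
    n_timepoints = int((stop_ms - start_ms) // step_ms) + 1
    return n_timepoints * n_channels


# Hypothetical setup: 32 channels, all time points from 100 to 900 ms.
full = n_comparisons(100, 900, 250, 32)             # original 250 Hz data
decimated = n_comparisons(100, 900, 125, 32)        # after decimating to 125 Hz
fewer_channels = n_comparisons(100, 900, 125, 26)   # 6 channels dropped as well
mean_window = 1 * 32                                # one mean amplitude per channel

print("250 Hz, 32 channels:", full)
print("125 Hz, 32 channels:", decimated)
print("125 Hz, 26 channels:", fewer_channels)
print("single mean time window, 32 channels:", mean_window)
```

Averaging within a single a priori time window leaves only one test per channel, which is why it is the most powerful of these options when you know where to look.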

2. Special considerations for permutation tests when your sample size is small

When all possible permutations are considered in a permutation test, the permutation test p-values are exact (i.e., they are not potentially inaccurate estimates). Thus, permutation tests are accurate even when sample sizes are small. This is a strength of permutation tests over related methods, such as bootstrap resampling or the jackknife, that can be quite imprecise with small sample sizes.
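As an illustration of what "considering all possible permutations" means for a one-sample/repeated measures design, here is a minimal Python sketch that enumerates every sign flip of a set of difference scores. It is not the toolbox's code: the function name exact_sign_flip_p and the data are made up, and it tests a single variable using the sum of the differences as the test statistic (for a single variable this ranks the permutations the same way as the absolute t statistic, because sign flips leave the sum of squares unchanged).

```python
from itertools import product


def exact_sign_flip_p(diffs):
    """Exact two-tailed p-value from all 2**n sign flips of the differences."""
    observed = abs(sum(diffs))
    n_extreme = 0
    n_total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        stat = abs(sum(s * d for s, d in zip(signs, diffs)))
        n_total += 1
        # Small tolerance guards against floating-point ties being missed.
        if stat >= observed - 1e-12:
            n_extreme += 1
    return n_extreme / n_total


# Hypothetical difference scores (condition A minus condition B), 3 participants.
p = exact_sign_flip_p([1.0, 2.0, 3.0])
print(p)
```

Because every permutation is visited, the returned p-value is the exact proportion of permutations at least as extreme as the observed data, not a Monte Carlo estimate.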

However, with permutation tests only a finite set of p-values is possible (at most the number of possible permutations), and with small sample sizes (e.g., fewer than 7 participants for a one-sample/repeated measures test, fewer than 5 participants per group for an independent samples test) the number of possible p-values can be very limited. When this occurs, the desired family-wise alpha level might be impossible to achieve, and you might be forced to use an alpha level that is much less or much more conservative than you would like. For example, if you performed a two-tailed repeated measures test with only 3 participants, there would be only 2^3 = 8 possible permutations, so the most conservative alpha level you could use would be 2/8 = 0.25. In such situations, it may be better to use an alternative method for multiple comparison correction, such as an algorithm for false discovery rate control or the Bonferroni-Holm procedure for family-wise error rate control.
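The arithmetic behind these limits is easy to check. The sketch below (plain Python, with hypothetical helper names) computes the smallest two-tailed p-value a fully enumerated permutation test can produce. It assumes sign-flip permutations for the one-sample/repeated measures case and, for the independent samples case, two groups of equal size, so that each labeling has a mirror image with the opposite sign.

```python
from math import comb


def min_p_one_sample(n_participants):
    """Smallest two-tailed p-value with 2**n sign-flip permutations."""
    return 2 / 2 ** n_participants


def min_p_two_groups(n_per_group):
    """Smallest two-tailed p-value for two equal-sized independent groups."""
    return 2 / comb(2 * n_per_group, n_per_group)


for n in (3, 5, 6, 7):
    print(n, "participants, repeated measures:", min_p_one_sample(n))

for n in (3, 4, 5):
    print(n, "per group, independent samples:", round(min_p_two_groups(n), 4))
```

With 3 participants the smallest attainable two-tailed p-value is 0.25, which matches the example above. With 6 participants it is 0.03125 and the next attainable value is 0.0625, so a family-wise alpha level of exactly 0.05 cannot be achieved.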

Note that the code for performing permutation tests (tmaxGND, tmaxGRP, clustGND, and clustGRP) will provide you with an estimate of the true alpha level of each test. If the estimated alpha level differs substantially from your desired alpha level, your sample size is too small (or you're using far too few permutations).

3. Practical tips on interpreting the results of FDR control

FDR control is most clearly useful for exploratory analyses. Given a pilot data set, FDR control can identify which effects might be present in the data, and you don't have to be too concerned about the possible failings of FDR control. Subsequent experiments can use these exploratory results to limit the number of hypothesis tests and can check whether the effects are indeed reliable using procedures that are guaranteed to provide strong control of the family-wise error rate (e.g., a tmax permutation test).

FDR control may be useful for non-exploratory analyses of data (especially of data that are difficult/expensive to replicate, like patient data). However, keep the following caveats in mind when interpreting FDR control results:

- Is FDR control guaranteed with this FDR procedure and this type of data? Many FDR methods are only guaranteed to control FDR when the dependent variables are independent or when the sample sizes are sufficiently large (for methods that have to estimate parameters from the data).

- Be wary of effects that consist of only a small percentage (the nominal FDR level or less) of the total rejections. Such effects could be composed entirely of false discoveries.

- Keep in mind that FDR control limits the AVERAGE proportion of false discoveries. In any single experiment, the proportion of false discoveries might be much greater than the average. For example, Troendle (2008) cites an example of a hypothetical data set for which an FDR control method would find 29% or more false discoveries 10% of the time. Indeed, because of this potential problem, Troendle advocates the use of alternative multiple testing controls instead of FDR procedures.

- Don't include known effects in your analyses as they will increase the number of false discoveries. For example, say you perform an N400 experiment and you are primarily interested in finding pre-N400 effects. You should apply FDR control to tests before the N400 (where you're not sure there will be any effects). If you include the N400 effect in your analysis, you'll have a lot of significant comparisons in the N400 time window, which will increase the number of false discoveries in the part of the data you really want to know about.
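The last caveat can be made concrete with a small numerical sketch. The text above does not name a specific FDR algorithm, so the Python code below assumes the Benjamini-Hochberg step-up procedure as one common example. The function bh_reject and all p-values are hypothetical and are not taken from the toolbox or from real data.

```python
def bh_reject(pvals, q=0.05):
    """Benjamini-Hochberg step-up: flag which p-values are rejected at level q."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        # Find the largest rank whose p-value is under the BH critical value.
        if pvals[idx] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for idx in order[:k_max]:
        rejected[idx] = True
    return rejected


# Hypothetical p-values from the pre-N400 window (the tests of real interest).
pre_n400 = [0.02, 0.04, 0.2, 0.3, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
# Hypothetical p-values from the N400 window, where a large effect is known.
n400 = [0.0001] * 10

alone = bh_reject(pre_n400)
combined = bh_reject(n400 + pre_n400)

n_pre_alone = sum(alone)
n_pre_combined = sum(combined[len(n400):])
print("pre-N400 rejections, analyzed alone:", n_pre_alone)
print("pre-N400 rejections, N400 window included:", n_pre_combined)
```

In this made-up example, the pre-N400 test with p = 0.02 is not rejected when the pre-N400 window is analyzed on its own, but it is rejected once the ten very small N400 p-values are added to the family. The known effect loosens the critical values for everything else, which is how it lets more false discoveries into the window you actually care about.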

References

- Troendle, J. F. (2008). Comments on: Control of the false discovery rate under dependence using the bootstrap and subsampling. Test, 17, 456-457.

- Yekutieli, D. & Benjamini, Y. (1999). Resampling-based false discovery rate controlling multiple test procedures for correlated test statistics. Journal of Statistical Planning and Inference, 82, 177-196.