CH391L/S13/DNA Computing

Introduction

DNA computing is the performance of computation using biological molecules, specifically DNA, instead of traditional silicon hardware.

The advantages of DNA over silicon include:

- Massive parallelism: many operations can be performed simultaneously, rather than sequentially as in conventional silicon computers.

- Size: DNA computers are many times smaller than silicon computers.

- Storage density: far more information can be stored in the same amount of space.

The disadvantages of DNA compared with silicon include:

- Impracticality: the quantities of DNA required to take advantage of its massive parallelism are unmanageable.

- Error-prone: getting biochemistry to perform as desired is still more difficult than controlling silicon and electricity.

- Speed: it still takes a long time to get your results, from start to finish, even though the computational step may be relatively rapid.

The concept of using molecules in general for computation dates back to 1959 when American physicist Richard Feynman presented his ideas on nanotechnology, but it took until 1994 for the first successful experiment using molecules for computation to be completed.

History

A computer scientist at the University of Southern California named Leonard Adleman first came up with the idea of using DNA for computing purposes after reading "Molecular Biology of the Gene," a book written by James Watson (co-discoverer of the structure of DNA).

In 1994 Adleman published an article in the journal Science[1] showing how DNA could be used to solve a well-known mathematical problem, the directed Hamiltonian path problem, a close relative of the "traveling salesman" problem. The goal is to find a path through a set of cities joined by one-way routes that starts at one given city, ends at another, and passes through every city exactly once. (The traveling salesman problem additionally asks for the shortest route.) This experiment was significant because it showed that information could indeed be processed using the interactions between strands of synthetic DNA.

"He represented each of the seven cities as separate, single-stranded DNA molecules, 20 nucleotides long, and all possible paths between cities as DNA molecules composed of the last ten nucleotides of the departure city and the first ten nucleotides of the arrival city. Mixing the DNA strands with DNA ligase and adenosine triphosphate (ATP) resulted in the generation of all possible random paths through the cities. However, the majority of these paths were not applicable to the situation–they were either too long or too short, or they did not start or finish in the right city. Adleman then filtered out all the paths that neither started nor ended with the correct molecule and those that did not have the correct length and composition. Any remaining DNA molecules represented a solution to the problem"[2].

Here is a simpler rephrasing of how only the applicable DNA strands were selected. First, he used PCR amplification to amplify only strands that start and end at the correct cities, A and G. Then he used gel electrophoresis to filter out all strands that were not of the correct length (a strand that visits each of the seven cities exactly once contains exactly six links between cities). Finally, he used affinity purification to filter out strands that do not visit every city (any strands that do not contain city A are discarded first, then those that do not contain B, and so on). Any strands left over must represent a valid route. In this case, the only valid route was encoded by the strand ABBCCDDEEFFG: A to B to C to D to E to F to G.
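The three selection steps can be imitated in software. The following is a minimal sketch, not a model of the chemistry: the graph in `EDGES`, the stopping probability, and the function names `random_path` and `select_hamiltonian` are all illustrative assumptions, and the edges are not Adleman's actual graph. Random walks stand in for ligation, and three list filters stand in for PCR, gel electrophoresis and affinity purification.

```python
import random

CITIES = "ABCDEFG"
# Hypothetical one-way routes, chosen for illustration only.
EDGES = {("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "F"), ("F", "G"),
         ("A", "D"), ("B", "A"), ("C", "B"), ("D", "B"), ("E", "C"), ("F", "B"),
         ("B", "G")}

def random_path(rng, max_len=12):
    """Ligation analogue: start at a random city and append compatible routes at random."""
    path = [rng.choice(CITIES)]
    while len(path) < max_len:
        nexts = sorted(b for (a, b) in EDGES if a == path[-1])
        if not nexts or rng.random() < 0.15:  # dead end, or the strand stops growing
            break
        path.append(rng.choice(nexts))
    return "".join(path)

def select_hamiltonian(paths, start="A", end="G"):
    """Apply the three filters in the order described in the text."""
    # Step 1 (PCR analogue): keep paths with the right first and last city.
    kept = [p for p in paths if p[0] == start and p[-1] == end]
    # Step 2 (gel electrophoresis analogue): keep paths of exactly seven cities.
    kept = [p for p in kept if len(p) == len(CITIES)]
    # Step 3 (affinity purification analogue): one pass per city.
    for city in CITIES:
        kept = [p for p in kept if city in p]
    return set(kept)

rng = random.Random(0)
pool = [random_path(rng) for _ in range(20_000)]
solutions = select_hamiltonian(pool)
print(sorted(solutions))
```

Because the pool is generated at random, a valid route is only found if it happens to be produced, which mirrors the reliance of the wet-lab method on having enough strands in the tube.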

Challenges

The problem Adleman's experiment addressed is NP-complete (NP stands for "nondeterministic polynomial time"), a class of problems for which no efficient (i.e. polynomial time) algorithm is known. It has been proposed that these types of problems are ideal for the massive parallelism of biology. Whereas traditional computers are designed to perform one calculation very fast, DNA strands can produce billions of potential answers simultaneously.

However, because in most difficult problems the number of possible solutions grows exponentially with the size of the problem, even relatively small problems would require massive amounts of DNA to represent all possible answers. For example, it has been estimated that scaling up the Hamiltonian path problem to 200 cities would require a weight of DNA that exceeds that of the Earth[3]. For this reason and others, the field has changed focus to other areas.
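A rough back-of-the-envelope calculation shows how quickly the required DNA grows. This is a sketch under stated assumptions, not the estimate from [3]: it assumes a fully connected graph, one copy of every ordering of the intermediate cities, 20 nucleotides per city as in Adleman's encoding, and an approximate average mass of 330 g/mol per nucleotide. The constant and function names are illustrative.

```python
import math

AVOGADRO = 6.022e23          # molecules per mole
GRAMS_PER_MOL_NT = 330.0     # approximate mass of one nucleotide (assumption)
NT_PER_CITY = 20             # nucleotides per city, as in Adleman's encoding
EARTH_MASS_KG = 5.97e24

def log10_candidate_paths(n_cities):
    """log10 of (n-2)!: orderings of the intermediate cities, start and end fixed."""
    return math.lgamma(n_cities - 1) / math.log(10)

def log10_mass_kg(n_cities):
    """log10 of the mass, in kg, of one strand per candidate path."""
    grams_per_strand = n_cities * NT_PER_CITY * GRAMS_PER_MOL_NT / AVOGADRO
    return log10_candidate_paths(n_cities) + math.log10(grams_per_strand) - 3

for n in (7, 20, 50, 200):
    print(n, round(log10_candidate_paths(n), 1), round(log10_mass_kg(n), 1))
```

Working in logarithms avoids overflow: under these assumptions the 200-city case needs on the order of 10^370 strands, and the resulting mass exceeds that of the Earth by hundreds of orders of magnitude.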

DNA-based Logic

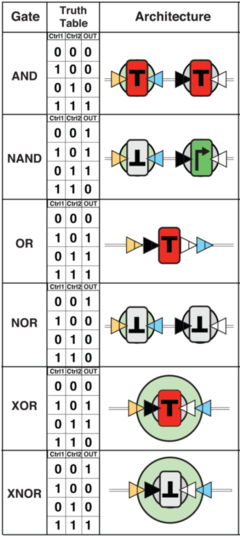

The fundamental building blocks of computing are logic circuits or "gates", such as AND, OR and NOT. Building on Adleman's work, other researchers have been developing DNA-based logic circuits that are useful in a variety of ways, such as performing mathematical and logical operations and recognizing patterns. Progress is even being made in in-cell programming, opening the door to, for example, advanced cancer treatments.

Strand Displacement

Perhaps the best-known advances in the field are by a group of researchers at Caltech led by Erik Winfree. In strand displacement, the inputs are free-floating single strands of DNA or RNA, and logic gates are made from two or more strands, one of which is the future output signal. If an input strand has a "tab" complementary to a logic gate, it binds to the gate and displaces the output strand, which then detaches. The released output strand can in turn act as an input to another logic gate, so the signal propagates through the circuit. In a 2011 paper, Winfree together with colleague Lulu Qian used this technique to build a circuit, made of 74 different DNA strands, capable of calculating the square roots of four-bit binary numbers (that is, 0 to 15)[4].
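The propagation of signals from gate to gate can be sketched abstractly. The model below is a deliberate simplification and an assumption of this page, not the design used in [4]: a gate fires when any free strand contains the reverse complement of the gate's exposed region, and firing releases the gate's output strand. The sequences and the names `Gate` and `run_cascade` are made up for illustration.

```python
from dataclasses import dataclass

COMPLEMENT = str.maketrans("ACGT", "TGCA")

def reverse_complement(seq):
    """Return the strand that would base-pair with seq."""
    return seq.translate(COMPLEMENT)[::-1]

@dataclass(frozen=True)
class Gate:
    toehold: str  # exposed single-stranded region of the gate
    output: str   # strand held by the gate until it is displaced

def run_cascade(inputs, gates):
    """Fire gates until nothing changes; return released strands in firing order."""
    free = list(inputs)
    fired = set()
    released = []
    progress = True
    while progress:
        progress = False
        for index, gate in enumerate(gates):
            if index in fired:
                continue
            target = reverse_complement(gate.toehold)
            if any(target in strand for strand in free):
                fired.add(index)
                free.append(gate.output)      # the output becomes a new input
                released.append(gate.output)
                progress = True
    return released

input_strand = "TGTAATCCCC"
gate1 = Gate(toehold=reverse_complement("TGTAATC"), output="CTCTAGGAGC")
gate2 = Gate(toehold=reverse_complement("TAGGAG"), output="TTTTGGGG")
print(run_cascade([input_strand], [gate2, gate1]))
```

Here `gate2` can only fire after `gate1` has released its output, which is the cascading behaviour the paragraph describes. Real circuits also have to deal with reaction rates, leakage and signal restoration, none of which this sketch attempts.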

DNAzymes

At Columbia University, Milan Stojanovic and his group have built circuits using an approach related to strand displacement, based on deoxyribozymes or DNAzymes (synthetic, single-stranded DNA sequences that cut other DNA strands at specific places). They make logic gates by adding "stem loops" to the DNAzyme, which prevent it from working. When an input strand binds to the complementary sequence on a loop, the loop opens, which activates the DNAzyme and turns the gate on. The gate then interacts with other strands in a cascading fashion, allowing the creation of more complex logic. Stojanovic's group used this method to build circuits able to play tic-tac-toe[5].

Enzymes

Logic can also be created using methods that more closely resemble natural operations inside living cells. A group led by Yaakov Benenson at the Swiss Federal Institute of Technology (ETH Zurich) and Ron Weiss of MIT is building circuits from common enzymes such as polymerases, nucleases and exonucleases that operate inside cells, making use of existing cellular infrastructure. In 2011, the team created a circuit capable of first recognizing a cervical cancer cell and then destroying the cell in which it is found. It works by looking for five microRNAs specific to cervical cancer; when all five are at the right levels, the circuit activates, producing a protein that causes the cell to self-destruct[6].
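Logically, the five-microRNA check described above behaves like a five-input AND gate. The sketch below only illustrates that logic: the marker names, the acceptable ranges in `PROFILE` and the function `should_trigger` are hypothetical placeholders, not values taken from [6].

```python
# Hypothetical acceptable ranges (arbitrary units) for five markers.
PROFILE = {
    "marker_1": (50.0, float("inf")),  # must be high
    "marker_2": (50.0, float("inf")),  # must be high
    "marker_3": (0.0, 10.0),           # must be low
    "marker_4": (0.0, 10.0),           # must be low
    "marker_5": (0.0, 10.0),           # must be low
}

def should_trigger(levels):
    """Return True only if every marker is measured and lies within its range."""
    return all(
        name in levels and low <= levels[name] <= high
        for name, (low, high) in PROFILE.items()
    )

cancer_like = {"marker_1": 80, "marker_2": 120, "marker_3": 2, "marker_4": 5, "marker_5": 1}
healthy_like = dict(cancer_like, marker_3=40)  # a single marker out of range

print(should_trigger(cancer_like), should_trigger(healthy_like))
```

Requiring all five conditions at once is what makes the classifier selective: a cell that matches only four of the five markers is left alone.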

Algorithmic self-assembly

DNA computing has benefitted from the separate and distinct field of DNA nanotechnology. The founder of the field, American biochemist Nadrian Seeman, has worked with Erik Winfree to show how two-dimensional sheets of DNA "tiles" could self-assemble into other, larger structures. Winfree and his student Paul Rothemund then demonstrated how these structures could be used for computing purposes in a 2004 paper that describes the self-assembly of a fractal called a Sierpinski gasket, which won them the 2006 Feynman Prize in Nanotechnology. Winfree's major insight was that the tiles could be used as "Wang tiles", meaning the assembly of the structure was capable of computation[7].
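The link between tiling and computation can be seen in a few lines of code. In this minimal sketch, each new "tile" takes the value of the XOR of the two tiles it attaches to in the row beneath it, and repeating that single rule row by row produces the Sierpinski pattern. The function name `sierpinski_rows` is illustrative, and the code models only the logic of the assembly, not the DNA tiles themselves.

```python
def sierpinski_rows(n_rows):
    """Grow rows of 0/1 tiles; each new tile is the XOR of its two neighbours below."""
    row = [1]  # seed tile
    rows = [row]
    for _ in range(n_rows - 1):
        padded = [0] + row + [0]  # the edges of the growing sheet count as 0
        row = [padded[i] ^ padded[i + 1] for i in range(len(padded) - 1)]
        rows.append(row)
    return rows

for r in sierpinski_rows(16):
    print("".join("#" if tile else "." for tile in r).center(16))
```

Each row is determined entirely by local matching with the previous row, which is the sense in which the growth of the structure carries out a computation.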

Storage

DNA can be treated as a highly stable and compact storage medium. In 2012, George Church, a bioengineer and geneticist at Harvard, succeeded in storing 1000 times the largest amount of data previously stored in DNA. Church encoded 700 terabytes of information into a single gram of DNA. For comparison, storing the same amount of information using the densest electronic medium would require 330 pounds of hard drives.

His method was to synthesize 96-bit strands, with each base representing a binary value (e.g. T and G = 1, A and C = 0). The "data" in question was 70 billion copies of his latest book, "Regenesis: How Synthetic Biology Will Reinvent Nature and Ourselves".

To read the DNA, Church simply sequenced it using next-generation sequencing, converting each of the bases back into binary. Each strand of DNA had a 19-bit address block at the start, so the DNA can be sequenced out of order and then sorted back into usable data by following the addresses.
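The encoding and the address-based reassembly can be sketched as follows. This is an illustration under assumptions rather than Church's actual pipeline: it assumes each strand is a 19-bit address followed by a 96-bit payload, picks between the two bases available for each bit at random, and ignores sequencing errors. The function names are hypothetical.

```python
import random

ADDRESS_BITS = 19
PAYLOAD_BITS = 96
ZERO_BASES = "AC"  # A or C encodes 0
ONE_BASES = "TG"   # T or G encodes 1

def bits_to_bases(bits, rng):
    """Map each bit to one of its two allowed bases, chosen at random."""
    return "".join(rng.choice(ONE_BASES if b == "1" else ZERO_BASES) for b in bits)

def bases_to_bits(bases):
    return "".join("1" if base in ONE_BASES else "0" for base in bases)

def encode(data, rng):
    """Split data into addressed strands: 19-bit address, then 96-bit payload."""
    bits = "".join(f"{byte:08b}" for byte in data)
    strands = []
    for address, start in enumerate(range(0, len(bits), PAYLOAD_BITS)):
        payload = bits[start:start + PAYLOAD_BITS].ljust(PAYLOAD_BITS, "0")
        strands.append(bits_to_bases(f"{address:0{ADDRESS_BITS}b}" + payload, rng))
    return strands

def decode(strands, n_bytes):
    """Sort strands by address and rebuild the first n_bytes of the data."""
    chunks = {}
    for strand in strands:
        bits = bases_to_bits(strand)
        chunks[int(bits[:ADDRESS_BITS], 2)] = bits[ADDRESS_BITS:]
    bits = "".join(chunks[address] for address in sorted(chunks))
    return bytes(int(bits[i:i + 8], 2) for i in range(0, n_bytes * 8, 8))

rng = random.Random(1)
message = b"DNA can store data compactly."
strands = encode(message, rng)
rng.shuffle(strands)  # sequencing returns strands in no particular order
print(decode(strands, len(message)))
```

Because every strand carries its own address, the shuffled pool decodes to the original message. Having two bases per bit also leaves the encoder some freedom in choosing the sequence; the random choice used here is only a placeholder for whatever rule a real encoder would apply.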

iGEM Connection

On March 28, 2013, Drew Endy and his team of researchers at Stanford University published a paper[8] in Science that outlines how to create single-layer, transcriptor-based logic gates utilizing a three-terminal device architecture to control the flow of RNA polymerase along DNA. Endy has open-sourced these transcriptors through the Registry of Standard Biological Parts, and an informational video is available here.

A complementary development was published in Nature (January 2013) by scientist Timothy Lu at MIT that details how to store information about a cell's lifecycle events, like key moments in a cell's ancestry[9].

There is a great animated infographic at the end of this article that helps explain the concepts.

Future Directions

The most popular narrative surrounding the future of DNA computing and DNA-based logic is that one day the technology could be used to program living cells inside our bodies. For example, they could "detect disease, warn of toxic threats and, where danger lurked, even self-destruct cells gone rogue"[10]. To quote Drew Endy: "We're going to be able to put computers inside any living cell you want... Any place you want a little bit of logic, a little bit of computation, a little bit of memory -- we're going to be able to do that"[10]. Whether or not this prediction will come to fruition is up for debate, as the technology has its detractors. For example, many who participated in this year's synthetic biology class (Spring 2013) believed that natural evolutionary processes will always confound the efforts of those trying to bound the behavior of a living cell in vivo. However, no published critiques in the public domain could be found at the time of this writing.